How to fine-tune GPT-4o for industry-specific document processing and robotic process automation

How Automat is using OpenAI's vision fine-tuning to enhance IDP & RPA.

Lucas Ochoa

10.1.2024


Thanks to OpenAI's new vision fine-tuning, we can now demonstrate promising quantitative results, with accuracy gains in both intelligent document processing (IDP) and robotic process automation (RPA).

Read more about the launch in OpenAI's article featuring Automat.

Automat builds enterprise software automations using AI. We use agents to autonomously create and manage complex workflows, making them 10x faster to build and maintain than with traditional approaches. Here's how we use OpenAI's vision fine-tuning to supplement and enhance our software:

- Industry-specific document parsers: Custom intelligent document processing (IDP) models trained with proprietary customer data improve accuracy and reduce development time.

- UI-based automation-building agents: New agent-driven workflows for robotic process automation (RPA) on web and native Windows apps accelerate the build process on legacy systems.
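To make the fine-tuning step concrete, here is a minimal sketch of how one image-plus-answer training example could be packaged. It assumes the chat-style JSONL layout OpenAI documents for vision fine-tuning (a `messages` list whose user turn mixes `text` and `image_url` parts, with images passed as base64 data URLs). The helper names, system prompt, and instruction text are hypothetical and not taken from Automat's pipeline.

```python
import base64
import json


def image_to_data_url(image_bytes: bytes, mime: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def make_training_record(image_bytes: bytes, instruction: str, answer: str) -> dict:
    """Build one chat-format training example pairing an image with a target answer.

    The system prompt below is a hypothetical placeholder.
    """
    return {
        "messages": [
            {"role": "system", "content": "You extract structured data from documents."},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": instruction},
                    {"type": "image_url", "image_url": {"url": image_to_data_url(image_bytes)}},
                ],
            },
            # The assistant turn is the output the model should learn to produce.
            {"role": "assistant", "content": answer},
        ]
    }


def write_jsonl(records, path: str) -> None:
    """Write one JSON object per line, the layout used for fine-tuning files."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")
```

Each record becomes one line of the training file; the assistant turn holds the target output, such as the extracted JSON for a document or a coordinate pair for a UI element.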

Use Cases:

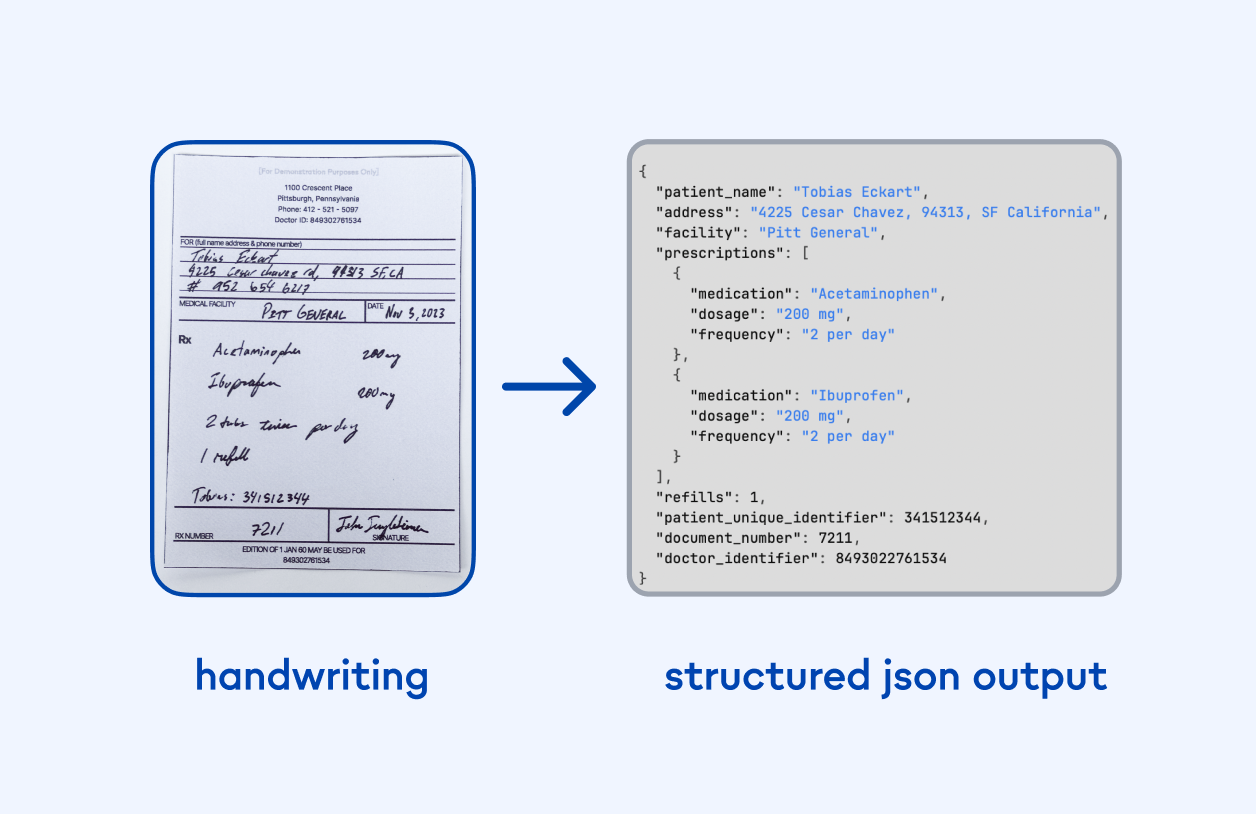

At Automat, we leverage vision models to build bots that streamline our customers' operations. Our bots perform actions by navigating GUI software systems, as well as by understanding and extracting information from unstructured documents. Many of our enterprise customers process millions of physical documents each year and go on to build UI-based automations.

For our case study, we used an anonymized dataset of unstructured documents on behalf of one of our customers, a Fortune 100 insurance company. We trained a combination of traditional and transformer-based IDP models to analyze and extract information from medical documents such as prescriptions. Below, we highlight the differences and advantages of end-to-end systems enhanced by GPT-4o vision fine-tuning.

Once data is extracted from the relevant documents, RPA automations reconcile it and enter it into legacy internal systems and third-party websites that lack accessible APIs. Traditional RPA methods have several limitations, detailed in the examples below. We conclude that vision fine-tuning, even on a small dataset of interfaces, can significantly accelerate the RPA build process.

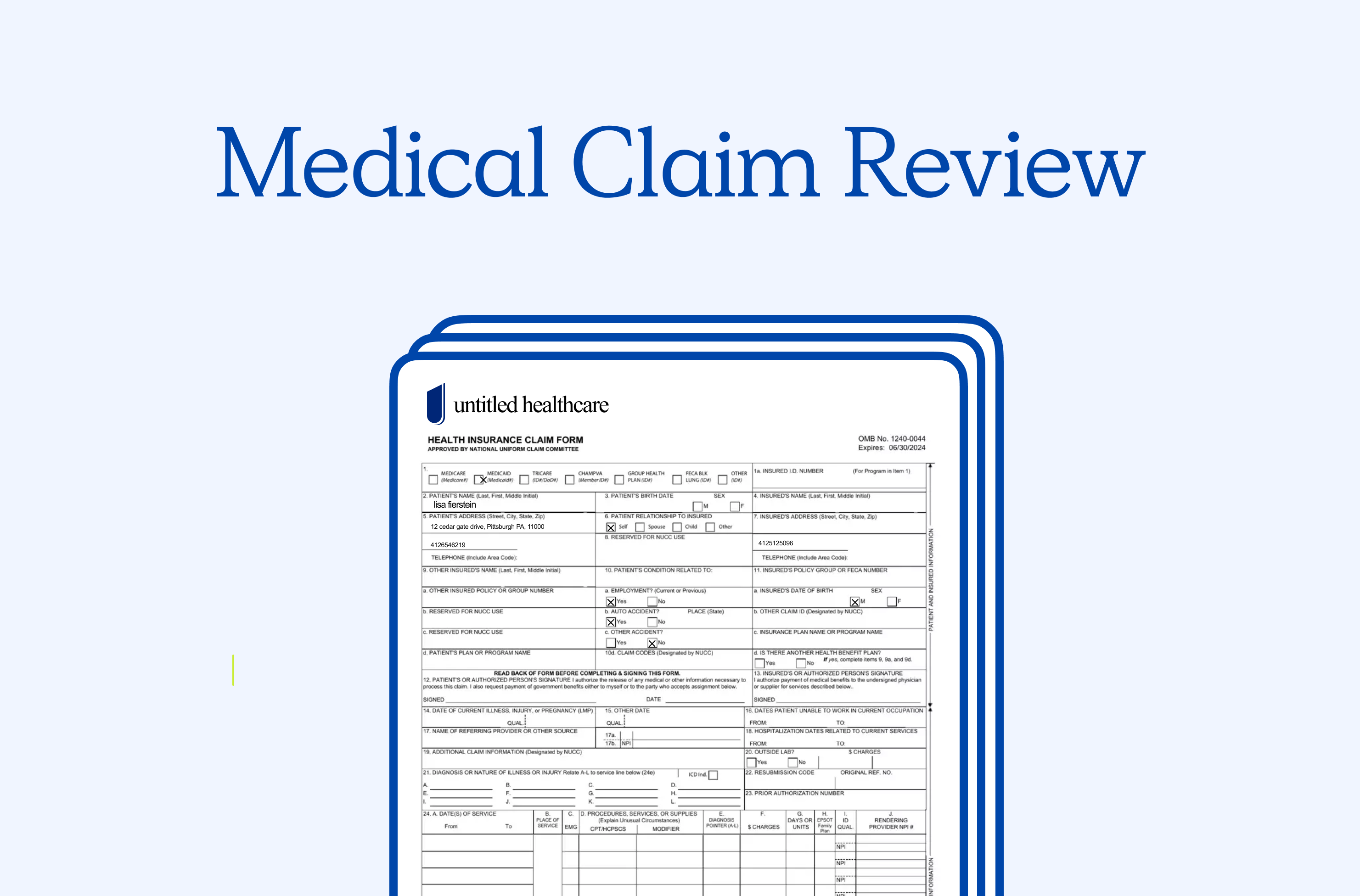

Intelligent Document Processing (IDP)

We used a mix of complex digital and handwritten health insurance documents (more than 15 schema fields, with recursive structures). The evaluation is based on the similarity between the extracted output and our ground-truth dataset of manually labeled documents. Looking forward, we are confident that GPT vision models can surpass the performance of traditional IDP systems because of their end-to-end design, which allows the model to capture the relationships that exist within industry-specific data.
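The similarity metric is not spelled out here, so the following is only one plausible way to score nested extraction output: flatten both the prediction and the ground truth into dotted field paths, compare each ground-truth field with Python's `difflib.SequenceMatcher` ratio after light normalization, and average. The function names and the field names in the test data are hypothetical.

```python
from difflib import SequenceMatcher
from typing import Any, Dict


def flatten(value: Any, prefix: str = "") -> Dict[str, str]:
    """Flatten nested dicts/lists into {"a.b[0].c": "value"} leaf paths."""
    leaves: Dict[str, str] = {}
    if isinstance(value, dict):
        for key, child in value.items():
            path = f"{prefix}.{key}" if prefix else str(key)
            leaves.update(flatten(child, path))
    elif isinstance(value, list):
        for i, child in enumerate(value):
            leaves.update(flatten(child, f"{prefix}[{i}]"))
    else:
        leaves[prefix] = "" if value is None else str(value)
    return leaves


def field_similarity(predicted: str, expected: str) -> float:
    """String similarity in [0, 1] after lowercasing and collapsing whitespace."""
    a = " ".join(predicted.lower().split())
    b = " ".join(expected.lower().split())
    if not a and not b:
        return 1.0
    return SequenceMatcher(None, a, b).ratio()


def document_score(predicted: Any, ground_truth: Any) -> float:
    """Mean similarity over every ground-truth leaf; missing fields score 0."""
    truth = flatten(ground_truth)
    pred = flatten(predicted)
    if not truth:
        return 1.0
    scores = [
        field_similarity(pred[path], expected) if path in pred else 0.0
        for path, expected in truth.items()
    ]
    return sum(scores) / len(scores)
```

Scoring per leaf means a missing nested entry (for example, one medication's dose) lowers the score proportionally instead of failing the whole document, which keeps recursive schemas from being graded all-or-nothing. Averaging `document_score` over the labeled set gives a dataset-level number.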

Robotic Process Automation (RPA) Software Agents:

Historically, RPA software has been slow to build and challenging to maintain. The improved accuracy and reliability of models like GPT-4o, together with advances in multimodality and structured outputs, have enabled new agentic applications. In many cases, customers need to run automations on a virtual desktop, where the bot is restricted to a video stream and mouse/keyboard input. This type of automation targets UI elements by their screen position, which can be tedious and unreliable. Fixed coordinates are also not robust, because an element's position can change with software updates, UI changes, and monitor resolution.
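One common mitigation for the resolution problem alone is to store targets in normalized coordinates and rescale them to whatever screen the bot is running on. The sketch below uses hypothetical helper names; note that it does nothing for software updates or layout changes, which is why locating elements visually from the current screenshot is attractive.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Resolution:
    width: int
    height: int


def to_normalized(x: int, y: int, res: Resolution) -> Tuple[float, float]:
    """Convert a pixel position into resolution-independent [0, 1] coordinates."""
    return x / res.width, y / res.height


def to_pixels(nx: float, ny: float, res: Resolution) -> Tuple[int, int]:
    """Map normalized coordinates onto a concrete screen, clamped to its bounds."""
    x = min(max(round(nx * res.width), 0), res.width - 1)
    y = min(max(round(ny * res.height), 0), res.height - 1)
    return x, y
```

For example, a button recorded at (960, 540) on a 1920×1080 display maps to (0.5, 0.5) and lands at (1280, 720) on a 2560×1440 display. If the application rearranges its layout, though, the rescaled point is still wrong.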

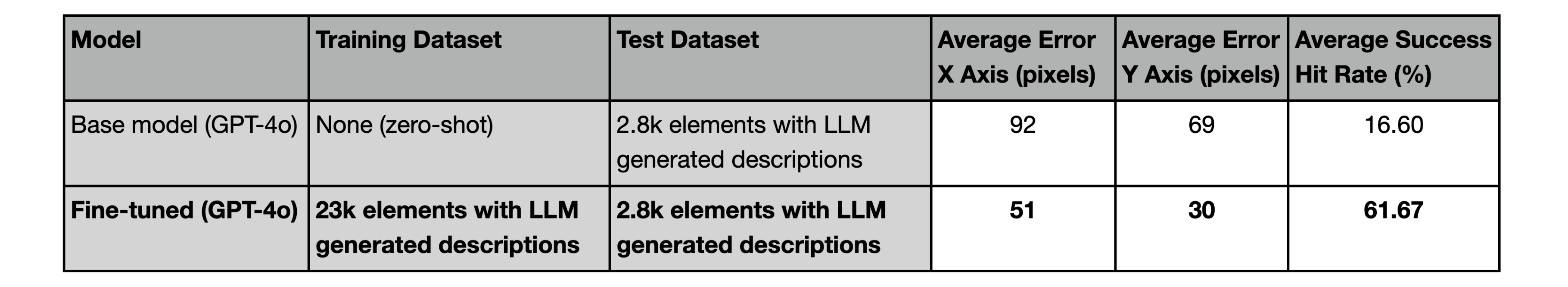

Vision models like GPT-4o struggle with tasks that require precise spatial localization. We evaluated GPT-4o's zero-shot ability to estimate the center coordinates of a UI element from a text description. Then, using a dataset of website screenshots, we fine-tuned the base model, improving the hit rate by 272%.
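Here is a minimal sketch of how a text-to-coordinate hit rate could be computed. It assumes, since the exact definition is not given, that a prediction counts as a hit when the predicted point falls inside the target element's ground-truth bounding box, and that the model replies with an `x, y` pair. Read as a relative increase, a 272% improvement means the fine-tuned hit rate is 3.72 times the zero-shot rate. The class and function names, and the 0.1 and 0.372 figures in the docstring, are illustrative rather than measured values.

```python
import re
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class Box:
    """Ground-truth bounding box of a UI element, in screenshot pixels."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, p: Point) -> bool:
        x, y = p
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def parse_point(text: str) -> Optional[Point]:
    """Extract the first "x, y" number pair from a model reply, e.g. "(412, 88)"."""
    match = re.search(r"(-?\d+(?:\.\d+)?)\s*,\s*(-?\d+(?:\.\d+)?)", text)
    if match is None:
        return None
    return float(match.group(1)), float(match.group(2))


def hit_rate(replies: Sequence[str], boxes: Sequence[Box]) -> float:
    """Fraction of replies whose predicted point lands inside its target box."""
    if len(replies) != len(boxes) or not boxes:
        raise ValueError("need exactly one reply per ground-truth box")
    hits = 0
    for reply, box in zip(replies, boxes):
        point = parse_point(reply)
        # Unparseable replies count as misses.
        if point is not None and box.contains(point):
            hits += 1
    return hits / len(boxes)


def relative_improvement(baseline: float, tuned: float) -> float:
    """Percentage change from baseline to tuned (illustrative: 0.1 -> 0.372 is about +272%)."""
    return (tuned - baseline) / baseline * 100.0
```

Scoring against the element's box rather than its exact center tolerates small offsets that would still produce a successful click.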

Looking ahead

We are confident that fine-tuning on a high-quality dataset can achieve human-level performance. We are excited about the potential of text-to-coordinate agents and reasoning/planning models like OpenAI o1. This opens the door to end-to-end RPA automation, significantly improving our company's efficiency and paving the way for new opportunities with our existing and future customers.

Authors: Pedro Martinez Lopez, Aaron Bannin, Gautam Bose, Shareef El-Sayed
